We (John Azariah and Mahesh Krishnan) gave a talk at Tech.Ed Australia this year titled Casablanca: C++ on Azure. The talk was slotted in at 8:15 am on the last day of Tech.Ed, after a long party the night before. The crowd was small, and although we were initially disappointed by the turnout, we took heart in the fact that this was the most viewed online video at Tech.Ed this year, with lots of five-star ratings, Facebook likes and tweets. This post gives you an introduction to Casablanca and highlights the things we talked about in the Tech.Ed presentation.

So, what is Casablanca? Casablanca is an incubation effort from Microsoft with the aim of providing an option for people to run C++ on Windows Azure. Until now, if you were a C++ programmer, the easiest option for you to use C++ would be to create a library and then P/Invoke it from C# or VB.NET code. Casablanca gives you an option to do away with things like that.

If you are a C++ developer and want to move your code to Azure right away, all we can say is “Hold your horses!” It is, like we said, an incubation effort and not production-ready yet. But you can download it from the DevLabs site, play with it and provide valuable feedback to the product team.

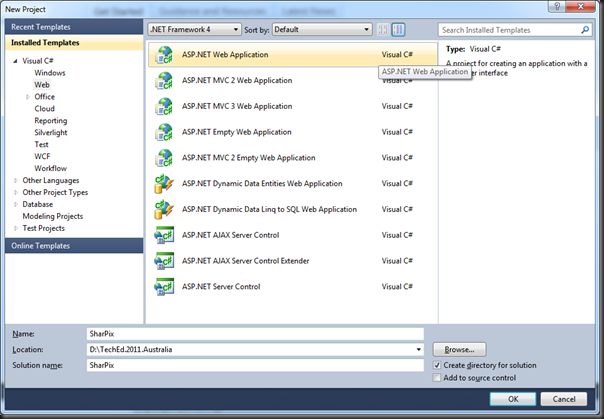

You are also probably thinking, “Why use C++?” The answer to that question is really “Why not?” Microsoft has been giving developers the option to use various other languages and platforms, such as Java and Node.js, to write for Azure, and now it is giving the same option to C++ programmers: use the language of their choice to write applications for Azure. Although there has been a bit of a resurgence in C++ in the last couple of years, we are not really trying to sell C++ to you. If we were writing a web app that talks to a database, our first choice would probably still be ASP.NET MVC using C#, and maybe Entity Framework to talk to the DB. What we are trying to say is that you still need to use the language and framework that work best for you, and if C# is the language you are comfortable with, then why change?

On the other hand, if you are using C++, then you probably already know why you want to continue using it. You may be using it for cross-platform compatibility or better performance, or maybe you have lots of existing legacy code that you can’t be bothered porting across. Whatever the reason, Casablanca gives you an option to bring your C++ code to Azure without having to use another language to talk to its libraries.

The Node influence

When you first look at Casablanca code, you will notice that some of it resembles Node.js. A simple Hello World example in Node looks like this:

    var http = require('http');

    http.createServer(function (request, response) {
        response.writeHead(200, {'Content-Type': 'text/plain'});
        response.end('Hello World!');
    }).listen(8080, '127.0.0.1');

The equivalent Hello World in C++ would look something like this:

    using namespace http;

    http_listener::create("http://127.0.0.1:8080/",
        [](http_request request)
        {
            return request.reply(status_codes::OK,
                "text/plain", "Hello World!");
        }).listen();

Notice the similarity? This isn’t by accident. The Casablanca team has been influenced a fair bit by Node and the simplicity with which you can code in Node.

Other inclusions

The proliferation of HTML, web servers, web pages and the various languages for writing HTML-based web applications happened in the 90s. C++ may have been around a lot longer than that, but surprisingly, it didn’t ride the HTML wave. Web servers were probably written in C++, but the applications themselves were written using much simpler languages like PHP. Of course, we did have CGI, which you could write using C++, and there were scores of web applications written in C++, but somehow it really wasn’t the language of choice for writing them. (It didn’t help that scores of C++ developers moved on to things like Java, C#, and Ruby.) What C++ needed was a good library or SDK to work with HTTP requests and process them.

In addition to this, RESTful applications are becoming commonplace, and REST is increasingly becoming the preferred way to write services. So, the ability to easily process GET, PUT, POST and DELETE requests in C++ was also needed.

When we talk about RESTful apps, we also need to talk about the format in which the data is sent to and from the server. JSON seems to be the format of choice these days due to the ease with which it works with JavaScript.

The Casablanca team took these things into consideration and added classes into Casablanca to work with the HTTP protocol, easily create RESTful apps and work with JSON.

To process the different HTTP actions and write a simple REST application to do CRUD operations, the code will look something like this:

    auto listener = http_listener::create(L"http://localhost:8082/books/");

    listener.support(http::methods::GET, [=](http_request request)
    {
        //Read records from DB and send data back
    });

    listener.support(http::methods::POST, [=](http_request request)
    {
        //Create record from data sent in Request body
    });

    listener.support(http::methods::PUT, [=](http_request request)
    {
        //Update record based on data sent in Request body
    });

    listener.support(http::methods::DEL, [=](http_request request)
    {
        //Delete
    });

    /* Prevent Listen() from returning until user hits 'Enter' */
    listener.listen([]() { fgetc(stdin); }).wait();
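
If you want to get a feel for this register-a-handler-per-method pattern without installing Casablanca, here is a minimal sketch in standard C++ only. It is not how Casablanca is implemented: `toy_request`, `toy_listener` and `make_books_listener` are hypothetical names made up for illustration, and the "responses" are plain strings rather than real HTTP replies.

```cpp
#include <functional>
#include <map>
#include <string>
#include <utility>

// Hypothetical stand-ins for illustration only; these are NOT Casablanca types.
struct toy_request {
    std::string method;  // e.g. "GET"
    std::string body;    // request payload, if any
};

class toy_listener {
public:
    using handler = std::function<std::string(const toy_request&)>;

    // Register one handler per HTTP method, mirroring the shape of support().
    void support(const std::string& method, handler h) {
        handlers_[method] = std::move(h);
    }

    // Route a request to the handler registered for its method.
    std::string dispatch(const toy_request& request) const {
        auto it = handlers_.find(request.method);
        if (it == handlers_.end()) {
            return "405 Method Not Allowed";
        }
        return it->second(request);
    }

private:
    std::map<std::string, handler> handlers_;
};

// Builds a listener with GET and POST handlers for a toy "books" resource.
inline toy_listener make_books_listener() {
    toy_listener listener;
    listener.support("GET", [](const toy_request&) {
        return std::string("200 list of books");
    });
    listener.support("POST", [](const toy_request& request) {
        return "201 created: " + request.body;
    });
    return listener;
}
```

Calling `make_books_listener().dispatch({"POST", "Dune"})` runs the POST lambda, while a method with no registered handler falls through to the 405 branch. The real listener does the same kind of routing, but against live HTTP traffic.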

Notice how easy it is to process the individual HTTP actions? So, how does conversion to and from JSON objects work? To convert a C++ object to a JSON object and send it back as a response, the code will look something like this:

    using namespace http::json;
    ...
    value jsonObj = value::object();
    jsonObj[L"Isbn"] = value::string(isbn);
    jsonObj[L"Title"] = value::string(title);
    ...
    request.reply(http::status_codes::OK, jsonObj);

To read JSON data from the request, the code will look something like this:

    using namespace http::json;
    ...
    value jsonValue = request.extract_json().get();
    isbn = jsonValue[L"Isbn"].as_string();

Have a collection? No problem. The following code snippet shows how you can create a JSON array:

    ...
    auto elements = http::json::value::element_vector();

    for (auto i = mymap.begin(); i != mymap.end(); ++i)
    {
        T t = *i;
        auto jsonOfT = ...; // Convert t to http::json::value
        elements.insert(elements.end(), jsonOfT);
    }

    return http::json::value::array(elements);
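
To picture what such an array looks like once it goes over the wire, here is a rough sketch in standard C++ that builds the JSON text by hand. It does not use Casablanca: `books_to_json` is a hypothetical helper, the ISBN-to-title map is an assumed data shape, and the code deliberately skips JSON string escaping, which a real JSON library would handle for you.

```cpp
#include <map>
#include <string>

// Hypothetical helper for illustration; not part of Casablanca.
// Serializes isbn -> title pairs to a JSON array of objects.
// Assumes keys and values contain no characters that need JSON escaping.
inline std::string books_to_json(
    const std::map<std::string, std::string>& books) {
    std::string out = "[";
    bool first = true;
    for (const auto& entry : books) {
        if (!first) {
            out += ",";  // separate elements, but no trailing comma
        }
        first = false;
        out += "{\"Isbn\":\"" + entry.first +
               "\",\"Title\":\"" + entry.second + "\"}";
    }
    out += "]";
    return out;
}
```

Each map entry becomes one object with the same `Isbn` and `Title` keys used in the earlier snippets, and an empty map yields `[]`.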

Azure Storage

If you are running your application in Windows Azure, then chances are you will also want to use Azure storage. Casablanca provides you with the libraries to do this. The usage, again, is quite simple. You create the various clients for blobs, queues and tables as follows:

    storage_credentials creds(storageName, storageKey);
    cloud_table_client table_client(tableUrl, creds);
    cloud_blob_client blob_client(blobUrl, creds);
    cloud_queue_client queue_client(queueUrl, creds);

Notice the consistent way of creating the various client objects. Once you have initialized them, then their usage is quite simple too. The following code snippet shows you how to read data from Table storage:

    cloud_table table(table_client, tableName);
    query_params params;
    ...
    auto results = table.query_entities(params)
        .get().results();

    for (auto i = results.begin();
        i != results.end(); ++i)
    {
        cloud_table_entity entity = *i;
        entity.match_property(L"ISBN", isbn);
        ...
    }

Writing to Table storage is not difficult either, as seen in this code snippet:

    cloud_table table(table_client, tableName);
    cloud_table_entity entity(partitionKey, rowKey);
    entity.set(L"ISBN", isbn, cloud_table_entity::String);
    ...
    table.insert_or_replace_entity(entity);

Writing to blobs and queues follows a similar pattern of usage.

Async…

Another one of the main inclusions in Casablanca is the ability to do things in an asynchronous fashion. If you’ve looked at the way things are done in Windows Store applications or used the Parallel Patterns Library (PPL), then you will already be familiar with the “promise” syntax. In the previous code snippets, we resisted the urge to use it, as we hadn’t introduced it yet.

… and Client-Side Libraries

Also, we have been talking mainly about the server-side use of Casablanca, but another thing to highlight is that it can also be used for client-side programming. The following code shows the client-side use of Casablanca and how promises can be used:

    http::client::http_client client(L"http://someurl/");

    client.request(methods::GET, L"/foo.html")
        .then(
            [=](pplx::task<http_response> task)
            {
                http_response response = task.get();
                //Do something with response
                ...
            });
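
If the idea of a continuation is new to you, the following sketch imitates it using only the C++11 standard library. This is an illustration of the concept, not PPL or Casablanca code: `fetch_page`, `then` and `page_length_async` are hypothetical names, the "fetch" just builds a string instead of touching the network, and the helper spends a thread waiting on the previous result, which a real task library avoids.

```cpp
#include <cstddef>
#include <future>
#include <string>
#include <utility>

// Hypothetical stand-in for a slow network call; not a Casablanca API.
inline std::string fetch_page(const std::string& path) {
    return "<html>" + path + "</html>";
}

// Minimal continuation helper: runs `next` on the result of `previous`
// once that result is ready, and returns a future for the new result.
template <typename T, typename F>
auto then(std::future<T> previous, F next)
    -> std::future<decltype(next(std::declval<T>()))> {
    return std::async(
        std::launch::async,
        [](std::future<T> prev, F fn) { return fn(prev.get()); },
        std::move(previous), std::move(next));
}

// Starts the fetch asynchronously, then chains a step that measures the body.
inline std::future<std::size_t> page_length_async(const std::string& path) {
    std::future<std::string> page =
        std::async(std::launch::async, fetch_page, path);
    return then(std::move(page),
                [](std::string body) { return body.size(); });
}
```

The caller gets a future back immediately and only blocks when it finally calls `get()` on it, which is the same shape as the `.then(...)` chain in the Casablanca client code.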

If you need to find out more about PPL and promises, then you should read the article “Asynchronous Programming in C++” written by Artur Laksberg in MSDN Magazine.

Wait, there is more…but first let’s get started

Casablanca has also been influenced by Erlang and the concept of Actors, but let’s talk about that in another post. To get started with Casablanca, download it from the DevLabs site. It is available for both VS 2010 and 2012.